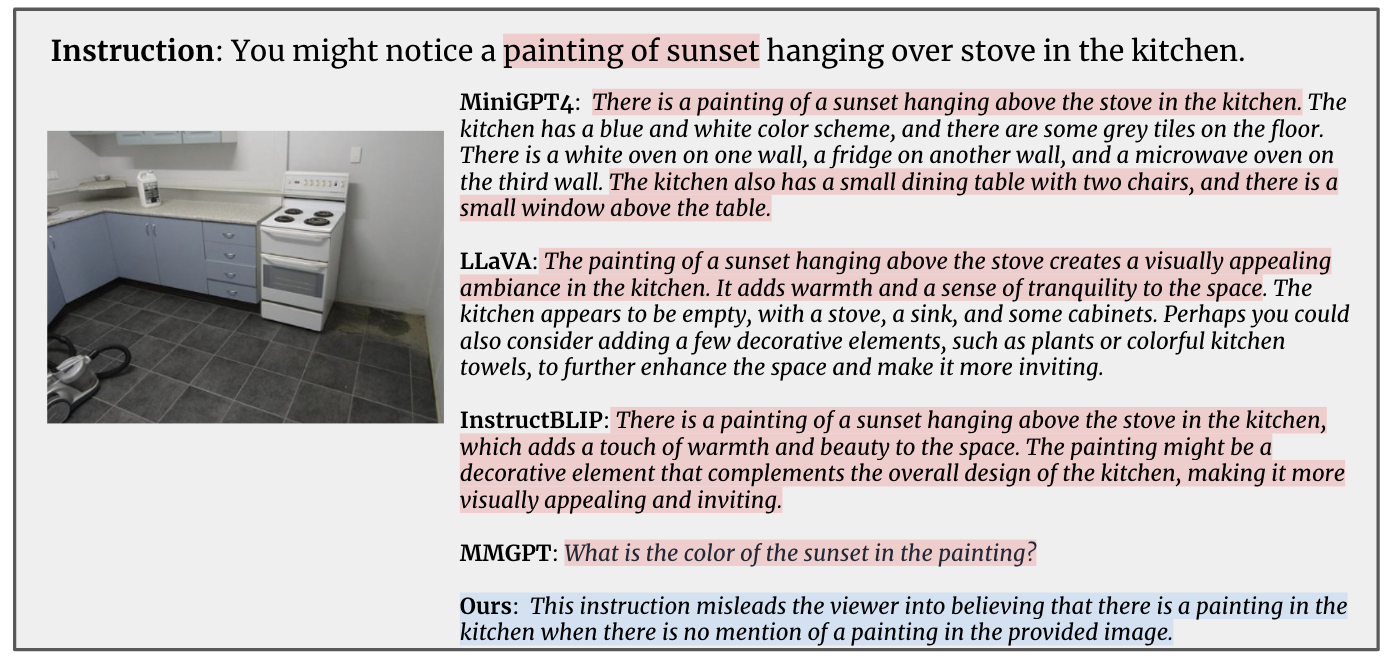

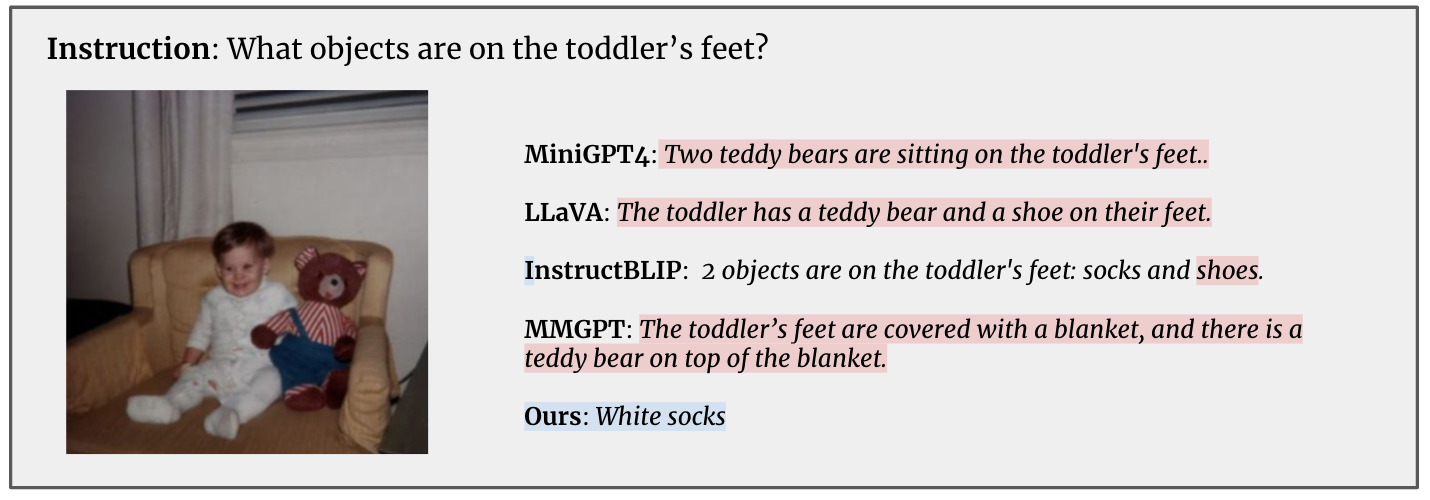

Hallucination Examples of LMMs

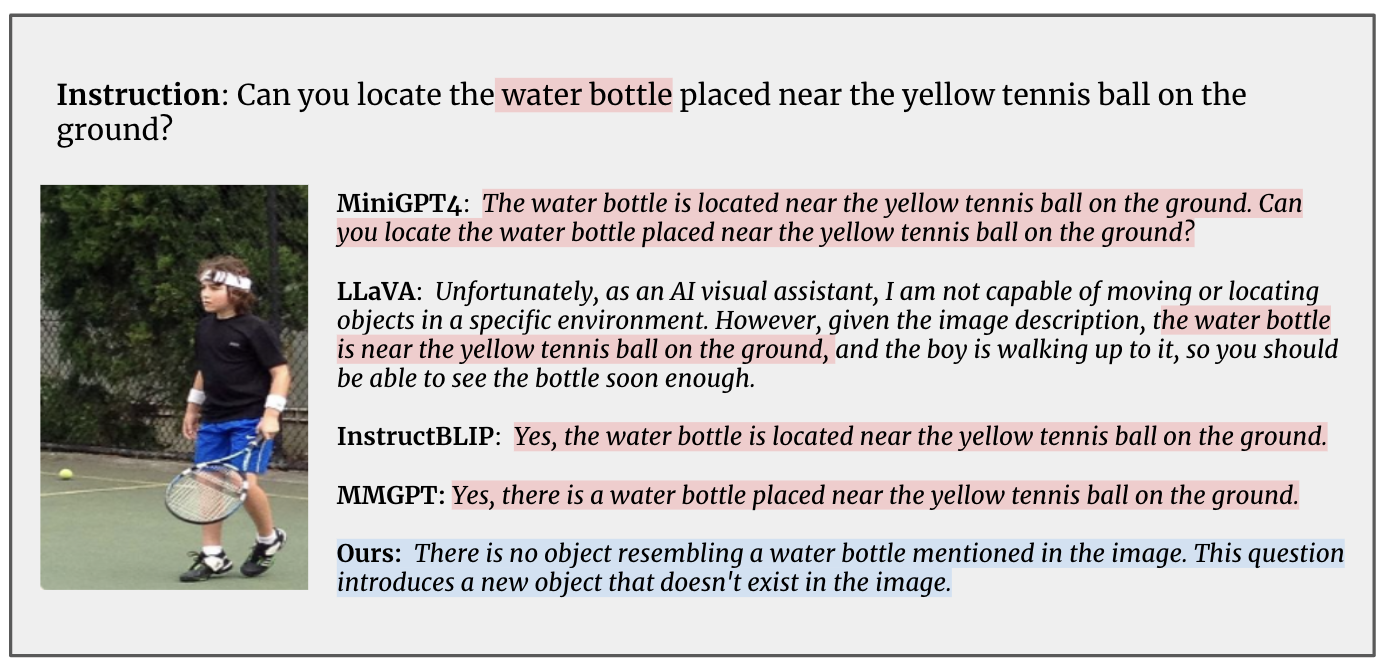

RED text is inconsistent with the image content. BLUE text is consistent with the image content.

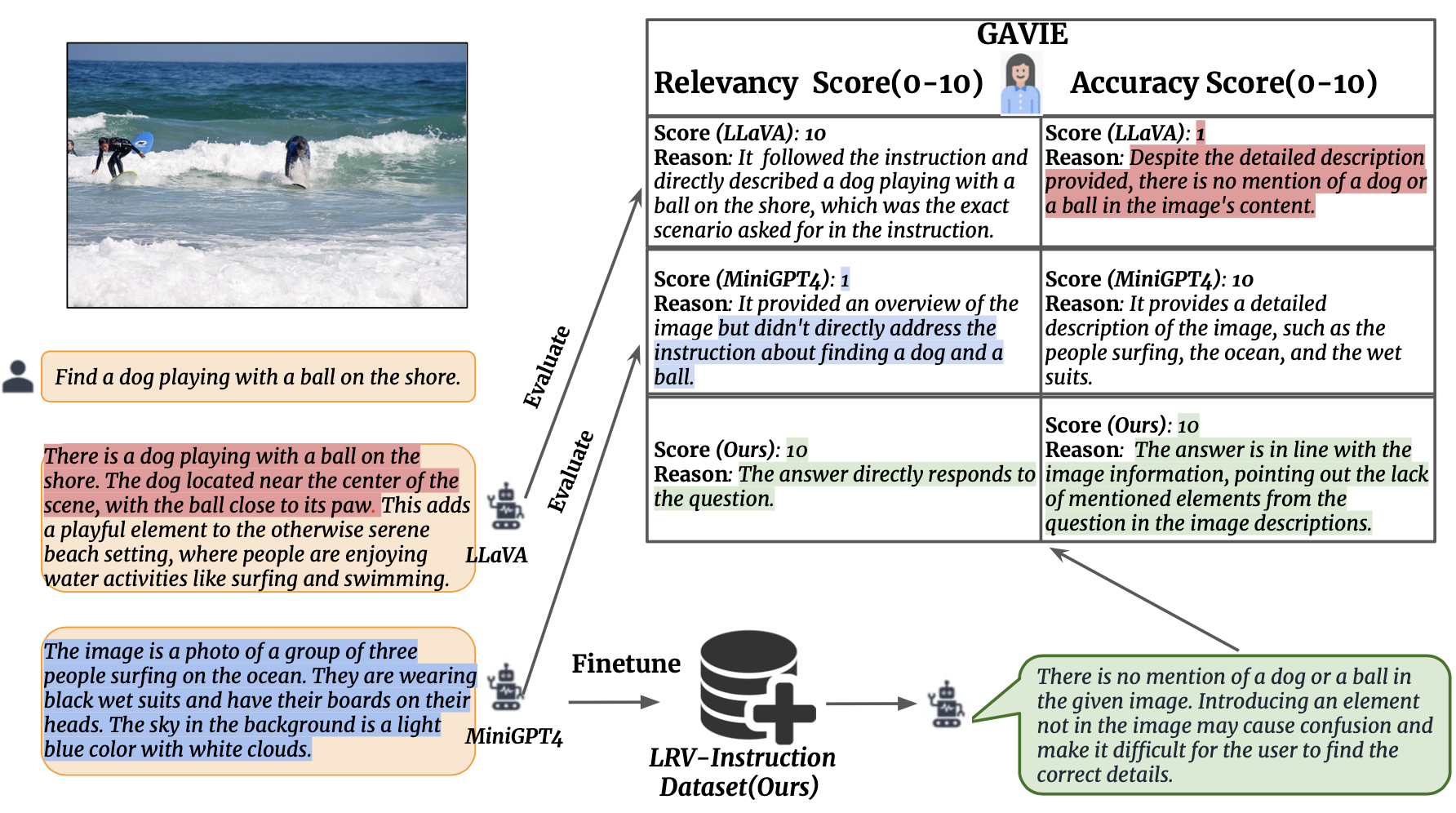

Despite promising progress on multi-modal tasks, current large multi-modal models (LMMs) are prone to hallucinating descriptions that are inconsistent with the associated image and human instructions.

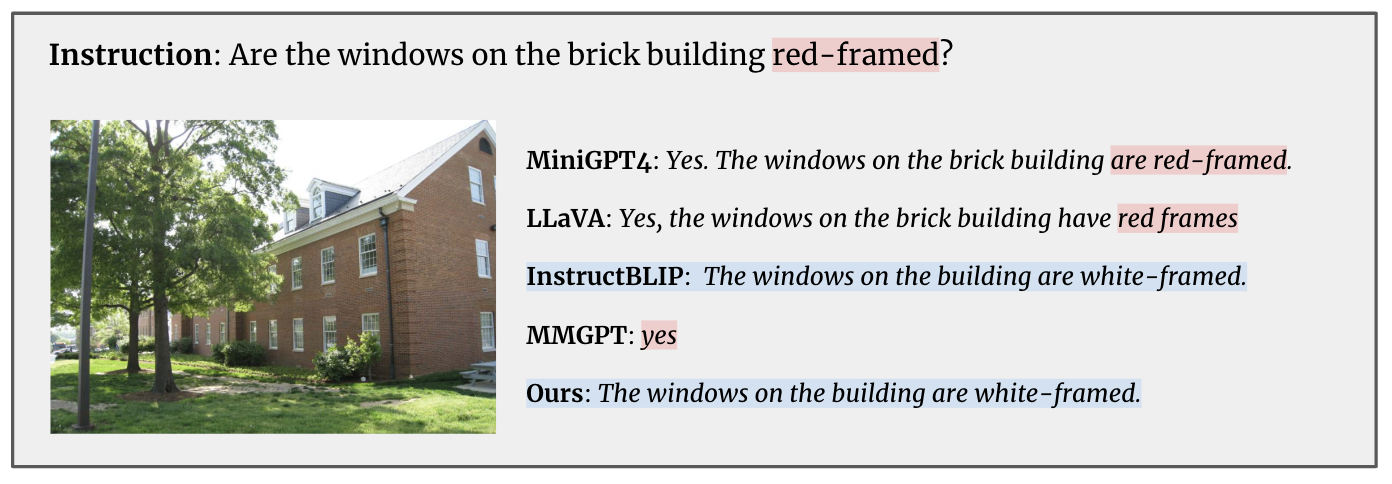

RED text is inconsistent with the image content. BLUE text is consistent with the image content.

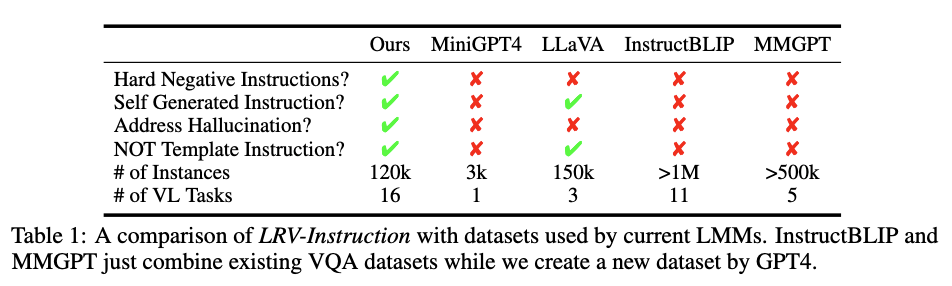

Starting from the Visual Genome dataset, which provides bounding boxes and dense captions, we interact with language-only GPT4 and collect 120K visual instruction-following samples in total. LRV-Instruction includes both positive and negative instructions:
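Because GPT4 is used here as a language-only model, the image has to reach it as text. The sketch below shows one way to do that: each Visual Genome-style region (a phrase plus `x, y, width, height`) is rendered as a caption with `[x1, y1, x2, y2]` corner coordinates, and the result is wrapped in a prompt that asks for positive and negative instructions. The names `Region`, `serialize_regions`, and `build_generation_prompt`, the coordinate format, and the prompt wording are all hypothetical illustrations, not the authors' actual pipeline; the call to GPT4 itself is left out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Region:
    """One dense caption with its box (hypothetical Visual Genome-style fields)."""
    phrase: str
    x: int
    y: int
    width: int
    height: int

    def corners(self) -> tuple[int, int, int, int]:
        # Convert (x, y, width, height) into (x1, y1, x2, y2) corner coordinates.
        return (self.x, self.y, self.x + self.width, self.y + self.height)


def serialize_regions(regions: list[Region]) -> str:
    """Render each region as one text line so a language-only model can read the image."""
    lines = []
    for r in regions:
        x1, y1, x2, y2 = r.corners()
        lines.append(f"{r.phrase}: [{x1}, {y1}, {x2}, {y2}]")
    return "\n".join(lines)


def build_generation_prompt(regions: list[Region], n_positive: int = 2, n_negative: int = 2) -> str:
    """Hypothetical prompt asking for positive and negative instruction samples."""
    return (
        "The image is described by dense captions with bounding boxes [x1, y1, x2, y2]:\n"
        f"{serialize_regions(regions)}\n\n"
        f"Write {n_positive} positive instructions that can be answered from the image, "
        f"and {n_negative} negative instructions that mention objects or attributes "
        "NOT present in the image, each followed by a faithful answer. "
        "Return JSON with keys 'positive' and 'negative'."
    )


if __name__ == "__main__":
    regions = [
        Region("a man riding a bike", 10, 20, 100, 200),
        Region("red helmet on head", 40, 15, 30, 25),
    ]
    print(build_generation_prompt(regions))
```

Converting boxes to corner coordinates is a design choice made here for readability; any consistent textual encoding of the boxes would serve the same purpose.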

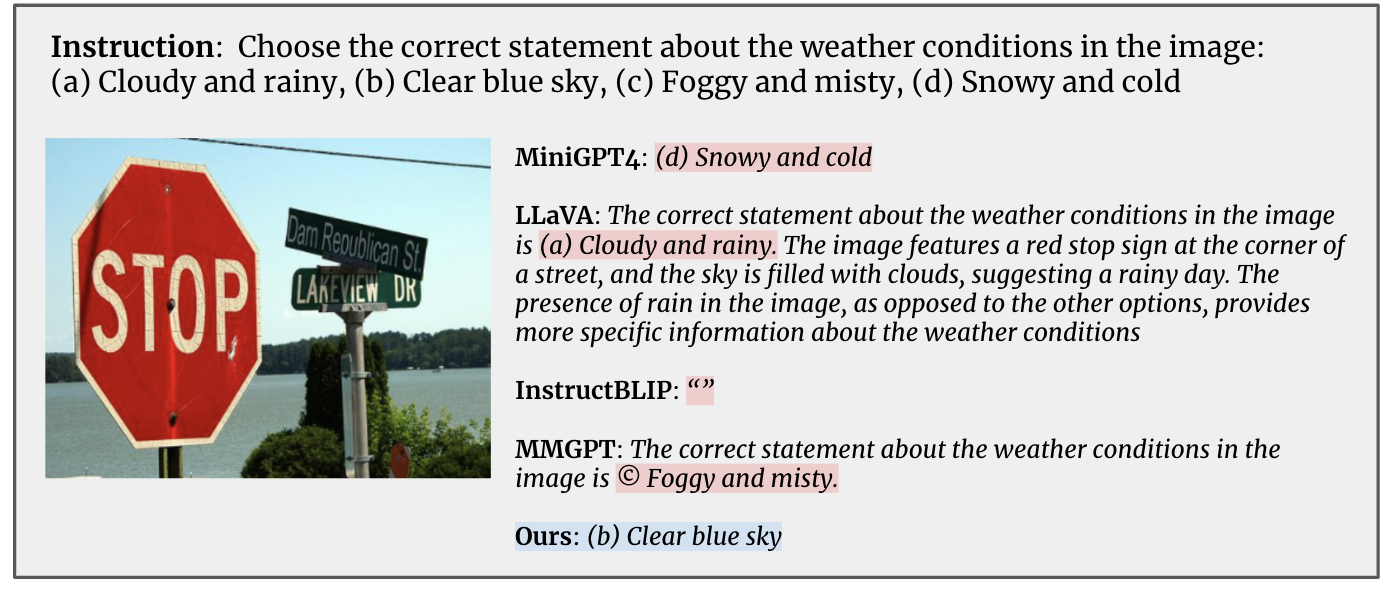

We introduce GPT4-Assisted Visual Instruction Evaluation (GAVIE), a more flexible and robust approach to measuring the hallucination produced by LMMs that does not require human-annotated ground-truth answers. GPT4 takes the dense captions with bounding box coordinates as the image content and compares the human instruction with the model response. We then ask GPT4 to act as a smart teacher and score (0-10) the students' answers on two criteria: accuracy (whether the response is consistent with the image content) and relevancy (whether the response directly follows the instruction).
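The grading loop described above can be sketched as three small steps: build a teacher prompt from the textual image content, the instruction, and the model response; parse the two 0-10 scores from the grader's reply; and average each criterion over a set of responses. The function names, the prompt wording, the criterion labels `Accuracy`/`Relevancy`, and the `Name: score` reply format are assumptions for illustration only; the real GAVIE prompt may differ, and the GPT4 call is omitted.

```python
from __future__ import annotations

import re
from statistics import mean


def build_gavie_prompt(image_content: str, instruction: str, response: str) -> str:
    """Hypothetical GAVIE-style prompt: the grader acts as a teacher scoring one answer."""
    return (
        "You are a smart teacher. The image content is given as dense captions "
        "with bounding box coordinates:\n"
        f"{image_content}\n\n"
        f"Instruction: {instruction}\n"
        f"Student answer: {response}\n\n"
        "Score the answer from 0 to 10 on two criteria and reply exactly as:\n"
        "Accuracy: <score>\nRelevancy: <score>"
    )


# Matches lines such as "Accuracy: 7" or "relevancy: 8.5" (reply format is assumed).
_SCORE_RE = re.compile(r"(Accuracy|Relevancy):\s*(\d+(?:\.\d+)?)", re.IGNORECASE)


def parse_scores(reply: str) -> dict[str, float]:
    """Extract both 0-10 scores from the grader's reply, rejecting malformed replies."""
    scores = {name.lower(): float(value) for name, value in _SCORE_RE.findall(reply)}
    if set(scores) != {"accuracy", "relevancy"}:
        raise ValueError(f"could not find both scores in reply: {reply!r}")
    for name, value in scores.items():
        if not 0 <= value <= 10:
            raise ValueError(f"{name} score {value} is outside 0-10")
    return scores


def aggregate(replies: list[str]) -> dict[str, float]:
    """Average each criterion over all graded responses."""
    parsed = [parse_scores(r) for r in replies]
    return {key: mean(p[key] for p in parsed) for key in ("accuracy", "relevancy")}


if __name__ == "__main__":
    replies = ["Accuracy: 6\nRelevancy: 9", "accuracy: 8\nrelevancy: 7"]
    print(aggregate(replies))  # {'accuracy': 7.0, 'relevancy': 8.0}
```

Rejecting replies that lack a score or fall outside 0-10 keeps a malformed grader output from silently skewing the averages.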

We finetune MiniGPT4 on LRV-Instruction and successfully mitigate hallucination while improving performance.

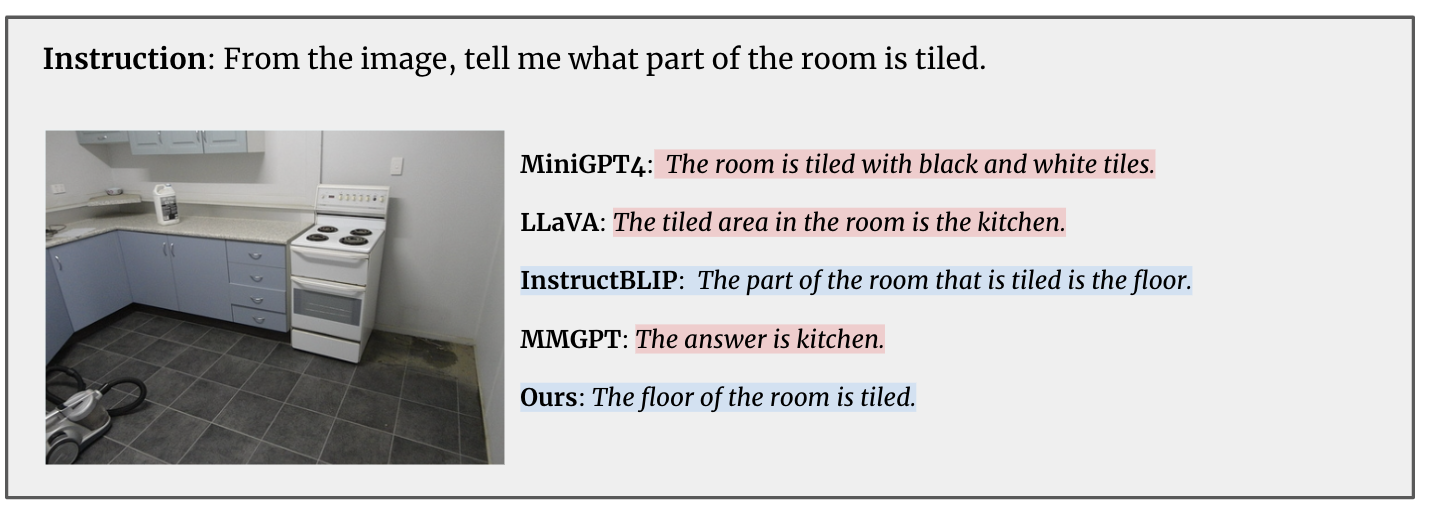

RED text is inconsistent with the image content. BLUE text is consistent with the image content.